Machine Learning in Computer Vision

1. Representation Learning/Distance Metric Learning

Large-scale Distance Metric Learning with Uncertainty.[CVPR’18] pdf

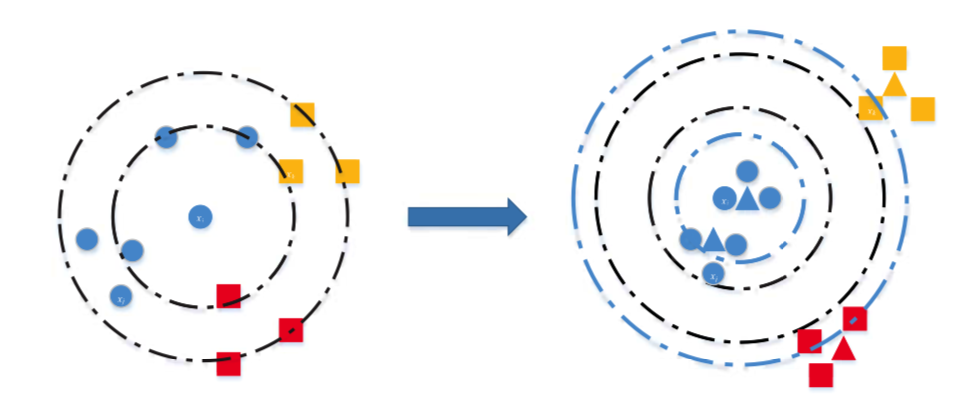

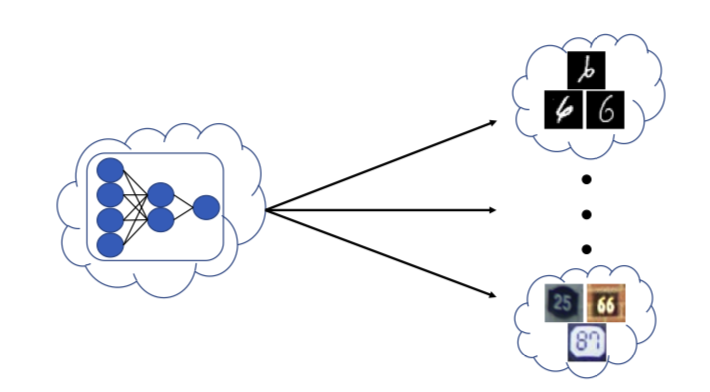

We show that representations can be optimized with triplets defined on multiple centers of each class rather than original examples.

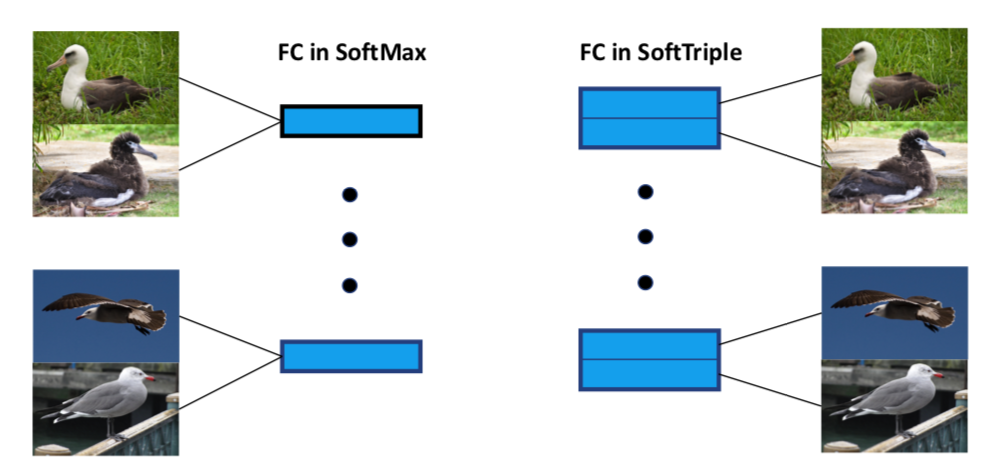

SoftTriple Loss: Deep Metric Learning Without Triplet Sampling.[ICCV’19] pdf code

We show that the conventional cross-entropy loss with a normalized softmax operator is equivalent to a triplet loss defined on class proxies. Based on this analysis, we propose an improved loss that encodes multiple proxies for each class.
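The idea of scoring an example against several proxies per class can be sketched in a few lines. This is a minimal illustration and not the released code: the function names, the softmax-weighted pooling over proxies, and the default values of `scale`, `margin`, and `gamma` are all assumptions chosen for the example.

```python
import math


def _normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def multi_proxy_similarity(embedding, proxies, gamma=0.1):
    """Softmax-weighted cosine similarity between one embedding and the
    proxies of a single class (hypothetical helper, assumed pooling rule)."""
    x = _normalize(embedding)
    sims = [_dot(x, _normalize(p)) for p in proxies]
    m = max(sims)  # subtract the max for numerical stability
    weights = [math.exp((s - m) / gamma) for s in sims]
    total = sum(weights)
    return sum(w / total * s for w, s in zip(weights, sims))


def multi_proxy_loss(embedding, class_proxies, label,
                     scale=20.0, margin=0.01, gamma=0.1):
    """Cross entropy over scaled class similarities, with a small margin
    subtracted from the true class (assumed hyperparameters)."""
    logits = []
    for c, proxies in enumerate(class_proxies):
        s = multi_proxy_similarity(embedding, proxies, gamma)
        if c == label:
            s -= margin
        logits.append(scale * s)
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]
```

With a single proxy per class, `multi_proxy_similarity` reduces to plain cosine similarity, so the loss becomes an ordinary normalized softmax cross entropy. No triplets are sampled at any point, since the proxies stand in for the other examples.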

Hierarchically Robust Representation Learning.[CVPR’20] pdf resource

We propose an algorithm to balance the performance of different classes in the source domain for pre-training. A balanced model can be better for fine-tuning on a different domain.

2. Learning with Limited Supervision

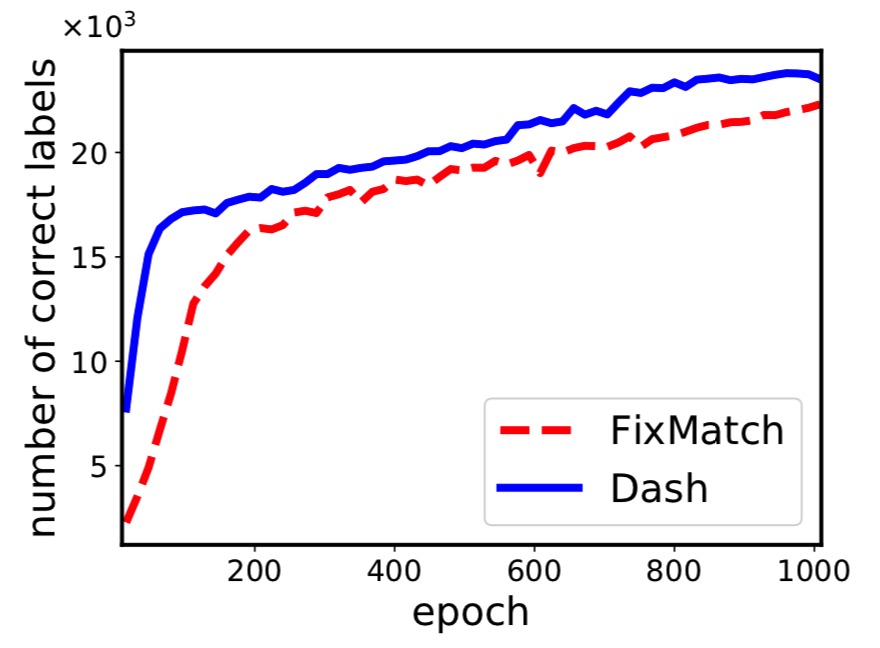

Dash: Semi-Supervised Learning with Dynamic Thresholding.[ICML’21] pdf

We propose a novel thresholding strategy that selects suitable unlabeled examples for semi-supervised learning.
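A dynamic threshold of this kind can be illustrated with a toy selection rule: keep only the unlabeled examples whose pseudo-label loss falls below a threshold that shrinks as training proceeds. The sketch below is an assumption-laden illustration rather than the published schedule; the geometric decay, the `floor`, and both function names are hypothetical.

```python
def dynamic_threshold(step, initial=1.0, decay=1.1, floor=0.05):
    """Threshold that shrinks geometrically with the training step.
    The schedule and its constants are assumptions for illustration."""
    return max(floor, initial * decay ** (-step))


def select_unlabeled(losses, step, **schedule):
    """Return indices of unlabeled examples whose loss is below the
    current threshold, so stricter filtering applies later in training."""
    threshold = dynamic_threshold(step, **schedule)
    return [i for i, loss in enumerate(losses) if loss < threshold]


# Per-example losses of four unlabeled examples under their pseudo-labels.
losses = [0.02, 0.3, 0.8, 1.5]
early = select_unlabeled(losses, step=0)
late = select_unlabeled(losses, step=20)
```

Early in training the threshold is loose and most examples pass; later only the examples the model fits confidently remain in the selected set.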

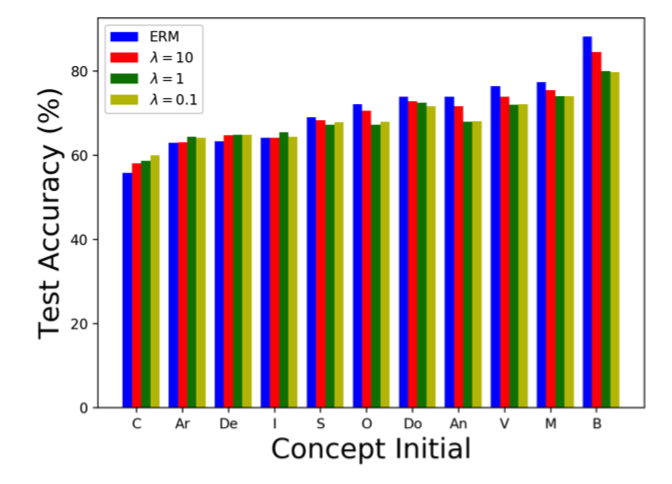

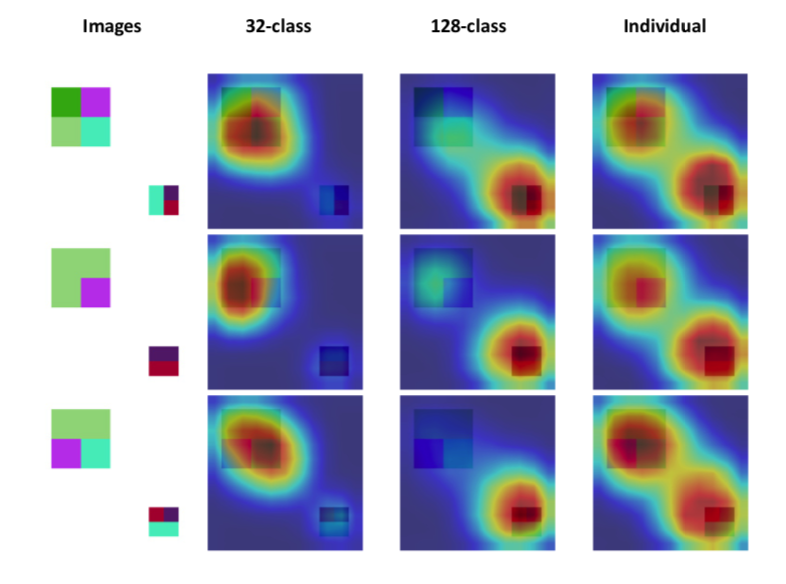

Weakly Supervised Representation Learning with Coarse Labels.[ICCV’21] pdf code

We show that the representations learned by deep learning are closely related to the training task. With coarse labels only, it is possible to approach the performance of full supervision.

3. Robust Optimization

Robust Optimization over Multiple Domains.[AAAI’19] pdf

Given examples from multiple domains, we propose an algorithm that effectively optimizes performance on the worst-performing domain.
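One common way to target the worst domain is to keep a weight per domain and shift weight toward the domains with the highest loss, while the model minimizes the weighted loss. The sketch below shows only the weight update, using a standard multiplicative-weights step. It is a generic illustration under that assumption, not necessarily the algorithm from the paper, and the function names and `step_size` are hypothetical.

```python
import math


def update_domain_weights(weights, domain_losses, step_size=0.5):
    """One multiplicative-weights ascent step on the probability simplex:
    domains with a higher loss receive a larger weight."""
    scaled = [w * math.exp(step_size * loss)
              for w, loss in zip(weights, domain_losses)]
    total = sum(scaled)
    return [s / total for s in scaled]


def robust_objective(weights, domain_losses):
    """Weighted loss that the model would minimize at the current weights."""
    return sum(w * loss for w, loss in zip(weights, domain_losses))


# Three domains, starting from uniform weights.
weights = [1.0 / 3] * 3
domain_losses = [0.2, 1.0, 0.5]
new_weights = update_domain_weights(weights, domain_losses)
```

Alternating this step with a gradient step on the model parameters gives a simple min-max training loop in which the hardest domain gradually dominates the objective.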

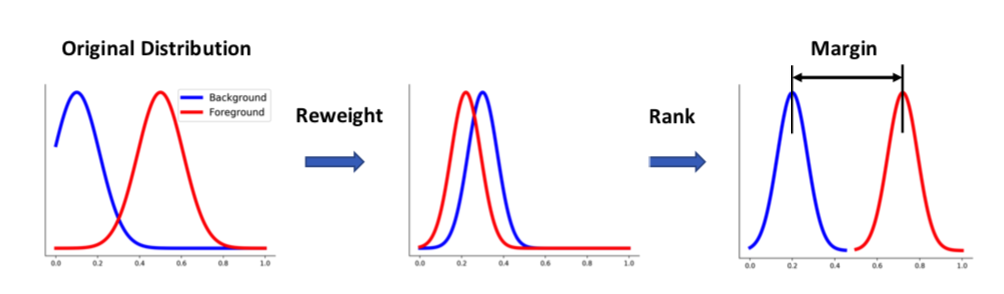

DR Loss: Improving Object Detection by Distributional Ranking.[CVPR’20] pdf code

To tackle the imbalance issue in object detection, we convert the classification problem to a ranking problem and propose a novel loss accordingly.